Quick summary

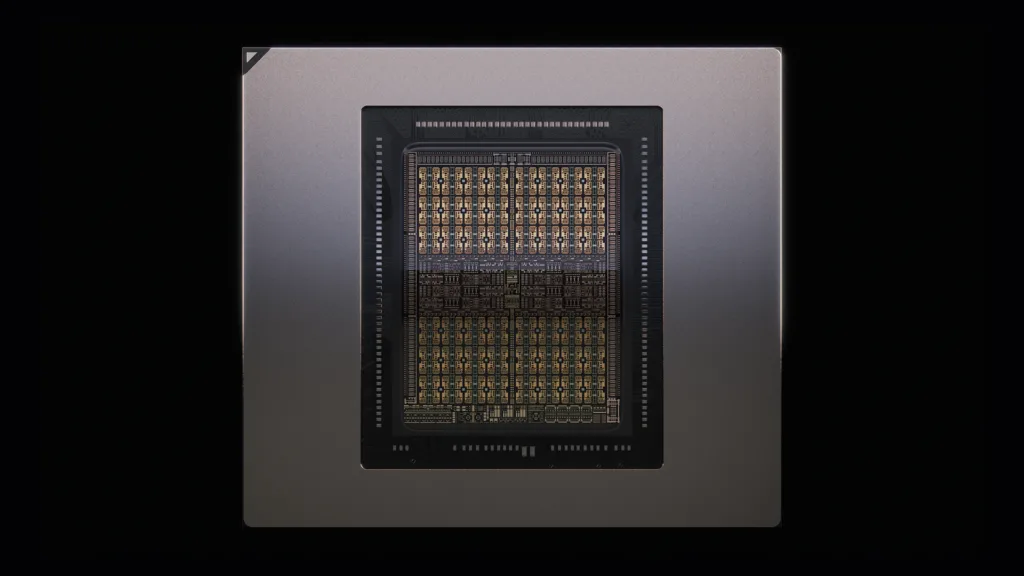

The Rubin CPX is a new, purpose-built class of NVIDIA GPU aimed at the heavy “context” phase of AI inference (often called prefill): the step in which a model ingests and reasons over a very large input, potentially millions of tokens, before producing its first output token. NVIDIA positions the Rubin CPX as part of a disaggregated inference architecture that separates context processing from token generation to improve efficiency and scale.

Key specifications & claims

- Compute: ~30 petaFLOPs of NVFP4 performance and ~3× attention acceleration vs. NVIDIA’s previous GB300 NVL72 design.

- Memory: 128 GB of GDDR7 on the Rubin CPX device (fast context memory optimized for million-token workloads).

- Role in stack: Designed to run the “context” phase; pairs with Rubin GPUs and NVIDIA Vera CPUs that handle the “generation” phase in the same rack architecture.

- Rack platform: The Vera Rubin NVL144 CPX rack integrates 144 Rubin CPX GPUs, 144 Rubin GPUs and 36 Vera CPUs — NVIDIA claims this configuration delivers ~8 exaFLOPs of NVFP4 compute (≈7.5× the GB300 NVL72).

- Use cases: Large-scale software development (million-token code reasoning), high-speed generative video, and other workloads that must reason across extremely long context windows.

- Availability / timeline: NVIDIA expects Rubin CPX and the Vera Rubin NVL144 CPX platform to become generally available in late 2026. Rubin-architecture products are roadmapped for manufacture on TSMC's 3 nm process.
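The headline numbers can be cross-checked with simple arithmetic. The sketch below uses only the figures quoted above (about 30 PF NVFP4 per Rubin CPX, 144 CPX devices per rack, about 8 EF NVFP4 per rack, about 7.5× over GB300 NVL72, and 128 GB of GDDR7 per CPX) and treats them as nominal peak values. The function name `rack_breakdown` is illustrative, and attributing the leftover compute to the rest of the rack is our inference, not an NVIDIA breakdown.

```python
# Back-of-envelope check of NVIDIA's quoted Vera Rubin NVL144 CPX figures.
# All inputs are NVIDIA's nominal (peak) claims as reported; nothing here is measured.

CPX_PER_RACK = 144
CPX_NVFP4_PFLOPS = 30.0    # ~30 PF NVFP4 per Rubin CPX (claimed)
RACK_NVFP4_EFLOPS = 8.0    # ~8 EF NVFP4 per NVL144 CPX rack (claimed)
SPEEDUP_VS_GB300 = 7.5     # ~7.5x vs. GB300 NVL72 (claimed)
CPX_GDDR7_GB = 128         # GDDR7 capacity per Rubin CPX


def rack_breakdown() -> dict:
    """Derive a few implied quantities from the quoted rack-level claims."""
    cpx_total_ef = CPX_PER_RACK * CPX_NVFP4_PFLOPS / 1000.0  # PF -> EF
    remainder_ef = RACK_NVFP4_EFLOPS - cpx_total_ef          # compute not accounted for by CPX
    gb300_implied_ef = RACK_NVFP4_EFLOPS / SPEEDUP_VS_GB300  # baseline implied by the 7.5x claim
    cpx_gddr7_tb = CPX_PER_RACK * CPX_GDDR7_GB / 1000.0      # GB -> TB (decimal units)
    return {
        "cpx_total_ef": cpx_total_ef,
        "remainder_ef": remainder_ef,
        "gb300_implied_ef": gb300_implied_ef,
        "cpx_gddr7_tb": cpx_gddr7_tb,
    }


if __name__ == "__main__":
    for name, value in rack_breakdown().items():
        print(f"{name}: {value:.2f}")
```

On these inputs the CPX devices account for about 4.32 EF, leaving about 3.68 EF for the rest of the rack (presumably the Rubin GPUs); the 7.5× claim implies a GB300 NVL72 baseline of roughly 1.07 EF NVFP4, and the CPX devices together carry about 18.4 TB of GDDR7. Because every input is a rounded peak figure, treat these results as sanity checks, not specifications.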

Why NVIDIA built a separate “context” GPU

As models grow to handle millions of tokens, the initial context pass (reading the input, embedding it, and computing attention across it) becomes extremely compute- and memory-heavy, and its bottlenecks differ from those of token generation. The context pass processes all input tokens in parallel and is largely limited by raw compute, whereas generation produces one token at a time per request and is largely limited by memory bandwidth. By disaggregating inference, dedicating Rubin CPX to context and Rubin GPUs/Vera CPUs to generation, NVIDIA says customers get more efficient scaling, lower latency for long-context workloads, and a lower cost of serving very large models.
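A rough model makes the point about different bottlenecks concrete. The sketch below assumes a hypothetical dense transformer: the `HypotheticalModel` shape (70B parameters, 80 layers, 8 KV heads, head dimension 128, 4-bit weights, 8-bit KV cache) is illustrative and does not describe Rubin CPX or any specific model. It uses two common approximations: about 2 FLOPs per parameter per token for the matrix multiplies, and a KV cache of 2 × layers × KV heads × head dimension × bytes per token. The point it illustrates is that a context pass performs many FLOPs per byte of weights read (compute-bound), while single-stream generation performs very few (bandwidth-bound), and that a million-token KV cache alone is on the order of a GPU's entire memory.

```python
from dataclasses import dataclass


@dataclass
class HypotheticalModel:
    # Illustrative dense-transformer shape; NOT a real model or an NVIDIA figure.
    params: float = 70e9       # parameter count
    layers: int = 80
    kv_heads: int = 8          # grouped-query attention KV heads
    head_dim: int = 128
    weight_bytes: float = 0.5  # ~4-bit weights
    kv_bytes: float = 1.0      # 8-bit KV cache entries


def matmul_flops(m: HypotheticalModel, tokens: int) -> float:
    # ~2 FLOPs (multiply + add) per parameter per token. Ignores attention-score
    # FLOPs, which grow with context length and make long prefill even heavier.
    return 2.0 * m.params * tokens


def weight_bytes_read(m: HypotheticalModel) -> float:
    # Every weight is streamed from memory once per forward pass.
    return m.params * m.weight_bytes


def arithmetic_intensity(m: HypotheticalModel, tokens_per_pass: int) -> float:
    # FLOPs performed per byte of weights read in one forward pass.
    return matmul_flops(m, tokens_per_pass) / weight_bytes_read(m)


def kv_cache_bytes(m: HypotheticalModel, context_tokens: int) -> float:
    # Keys and values (factor 2) for every layer, KV head, and token.
    return 2 * m.layers * m.kv_heads * m.head_dim * m.kv_bytes * context_tokens


if __name__ == "__main__":
    m = HypotheticalModel()
    print(f"context chunk of 8192 tokens: {arithmetic_intensity(m, 8192):,.0f} FLOPs/byte")
    print(f"generation, 1 token per pass: {arithmetic_intensity(m, 1):,.0f} FLOPs/byte")
    print(f"KV cache at 1M tokens:        {kv_cache_bytes(m, 1_000_000) / 1e9:.1f} GB")
```

Under these assumptions a context chunk reaches about 32,768 FLOPs per weight byte versus about 4 for single-token generation, and the KV cache for a million-token context is roughly 164 GB. Batching many users' generation steps raises the second figure, but each request still rereads its own KV cache for every new token, so generation stays bandwidth-heavy. This asymmetry is the motivation for giving each phase its own hardware.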

Additional context from industry reporting

Coverage from DatacenterDynamics, Forbes, CRN and others reports the same core claims (Rubin CPX's purpose, the NVL144 CPX rack design, NVFP4 usage and a 2026 timetable). Some articles also highlight NVIDIA’s broader Rubin roadmap (Rubin → Rubin Ultra, related Vera CPUs, and next-generation packaging and interconnect advances). Competitive comparisons (e.g., AMD's plans) appear in parallel reporting as vendors race to deliver rack-scale FP4/FP8 infrastructure.

Limitations & what to watch

- Real-world figures such as sustained throughput, power draw, and customer benchmarks can only be verified once systems are in customers’ hands or third-party tests appear. NVIDIA’s promotional numbers are peak/nominal metrics.

- Availability is projected for late 2026; roadmaps can shift due to silicon bring-up, supply chain, or validation work. Independent reviews and cloud provider deployments will be key signals to confirm real performance.

Bottom line

NVIDIA’s Rubin CPX is explicitly aimed at a new problem class, massive-context AI inference, and introduces a disaggregated rack architecture (Vera Rubin NVL144 CPX) to scale context processing separately from generation. If validated in the field, this could materially reduce the cost and latency of million-token applications (large codebases, long video, multi-document reasoning) and reshape how data centers assemble inference fleets.

Sources: NVIDIA announcement and press materials; DatacenterDynamics coverage; Forbes analysis; CRN and Tom’s Hardware reporting.